AI Agentic PPC Optimisation

Trust is the foundation of every relationship. And whether we realise it or not, we are already in a serious one with technology. It is evolving faster than we are and our trust is still catching up, trying to define what it should look like. This project is my exploration of trust in agentic flows.

PROJECT

SellQ — Designing an agentic AI workflow for PPC optimisation (solo exploration).

PROBLEM

PPC data without guidance leads to delays, wasted spend and missed growth.

SOLUTION

Designed an AI-powered agentic workflow that guides users to decisions while keeping them in control.

IMPACT

- Reduced ad spend waste

- ~90% faster task completion

- $100/month savings on third-party tools

- Lower mental load

- Reusable AI+UX trust framework

PPC complexity

Sellers rely on ads to grow their businesses, but many struggle with advertising. Some don’t even know the difference between ACoS and TACoS, let alone how to optimise a campaign.

Third-party tools promise to simplify this, and many now include GenAI features. Sellers are willing to try almost anything if it might help, even paying $100+ per month, yet most tools still deliver mediocre results.

“Adtomic burned through my budget in weeks and I had no idea why it made those changes.”

“The AI kept recommending keywords I’d already tested: nothing new, just wasted spend.”

“I still have to double-check everything manually because I don’t trust the recommendations.”

Diving deep

I wanted to understand how sellers actually think about ads and automation. I dug into:

- Competitor analysis (Adtomic, Helium 10, Jungle Scout)

- Community research (videos, seller groups, Reddit, podcasts)

- Benchmarking & patterns (agentic UX to design for trust)

Key insights

- Sellers are overwhelmed by complexity: PPC tools often show an overwhelming amount of data without clear prioritisation.

- Trust in automation is low: existing tools act like black boxes; they make changes but rarely explain why.

- Sellers want guidance, not blind automation: the magic formula is transparency + choice. People want to understand tradeoffs, preview changes and approve actions.

- One metric isn’t enough: tools that optimise only for ACoS miss the bigger picture. Sellers want multidimensional insights.
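To make the "one metric isn’t enough" point concrete, here is a minimal sketch using the standard definitions: ACoS is ad spend divided by ad-attributed sales, and TACoS is ad spend divided by total sales (ad-attributed plus organic). The two sellers and all figures are hypothetical, chosen only to show that identical ACoS can hide very different levels of ad dependence.

```python
def acos(ad_spend: float, ad_sales: float) -> float:
    """Advertising Cost of Sales: ad spend as a share of ad-attributed sales."""
    if ad_sales <= 0:
        raise ValueError("ad_sales must be positive")
    return ad_spend / ad_sales


def tacos(ad_spend: float, total_sales: float) -> float:
    """Total ACoS: ad spend as a share of all sales (ad-attributed + organic)."""
    if total_sales <= 0:
        raise ValueError("total_sales must be positive")
    return ad_spend / total_sales


# Two hypothetical sellers with identical ad performance but different organic sales.
seller_a = {"ad_spend": 200.0, "ad_sales": 1000.0, "total_sales": 1250.0}
seller_b = {"ad_spend": 200.0, "ad_sales": 1000.0, "total_sales": 4000.0}

for name, s in (("A", seller_a), ("B", seller_b)):
    print(
        name,
        f"ACoS={acos(s['ad_spend'], s['ad_sales']):.0%}",
        f"TACoS={tacos(s['ad_spend'], s['total_sales']):.0%}",
    )
```

Both sellers show the same ACoS, yet seller A spends a much larger share of total revenue on ads. A tool that looks only at ACoS would treat them as equivalent.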

Hypothesis

An agentic workflow optimised for trust and transparency reduces decision fatigue, improves outcomes and keeps sellers in control.

Seller & Business needs

Seller needs

- Understand the “why” behind each recommendation

- See high-impact opportunities first

- Make faster, simpler decisions without losing detail

- Stay in control with explicit approvals and transparency

Business needs

- Speed up seller decision-making

- Improve ROI with smarter optimisation

- Build trust in AI-driven tools

- Boost retention by making tools indispensable

North star metrics

- Faster actions (~80% faster)

- Increased trust (CSAT > 80%)

- Adopted recommendations (65–75%)

- Gained efficiency (TACoS +8–10%)

Agentic UI Principles

I focused on keeping sellers in control, making the why behind recommendations clear and leveraging the native Marketplace UI to guide them without adding friction.

I defined these principles around user needs first, then validated them using agentic AI research.

| Principle | Validated by research |
|---|---|
| Co-planning before acting | ReAct + Planner–Executor → the agent shows its plan first and explains why before doing anything. |
| User-in-the-Loop control | Human-in-the-loop UX + Magnetic UI → sellers approve actions and the agent highlights relevant UI elements for clarity. |
| Self-Reflection & Recovery | Reflexion → the agent reviews past suggestions and recommends fixes if performance drops. |
| Portfolio-Level multi-tasking | Multi-Agent Chains → the agent can manage multiple campaigns at once while surfacing the highest-impact actions first. |
| Context-Aware, Non-Intrusive design | Copilot UX research → the agent stays in its own panel and doesn’t interrupt existing workflows. |
| Design for trust and clarity | Transparent Agentic UI + Action-Oriented UI → recommendations are explained, evidence-based, and focused on clear next steps. |
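The first two principles (plan first, act only on approval) can be sketched as a small approval gate. This is an illustrative sketch, not SellQ’s implementation: the `Recommendation` type, the `Status` values, the `expected_impact` score and the sample actions are all hypothetical names introduced for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PROPOSED = "proposed"
    APPROVED = "approved"
    REJECTED = "rejected"
    APPLIED = "applied"


@dataclass
class Recommendation:
    action: str             # what the agent wants to do
    rationale: str          # the "why" shown to the seller
    expected_impact: float  # hypothetical score, used only for ordering
    status: Status = Status.PROPOSED


def plan(recs: list[Recommendation]) -> list[Recommendation]:
    """Co-planning: return the plan, highest expected impact first, without executing."""
    return sorted(recs, key=lambda r: r.expected_impact, reverse=True)


def apply_approved(recs: list[Recommendation]) -> list[str]:
    """Execute only what the seller explicitly approved; leave everything else untouched."""
    applied = []
    for rec in recs:
        if rec.status is Status.APPROVED:
            rec.status = Status.APPLIED
            applied.append(rec.action)
    return applied


recs = plan([
    Recommendation("Pause keyword 'cheap mugs'", "No sales in the reporting window", 0.3),
    Recommendation("Raise daily budget", "Campaign reaches its cap before evening", 0.8),
])
recs[0].status = Status.APPROVED  # the seller approves the top item only
print(apply_approved(recs))
```

Only the approved action is applied; the second recommendation stays in `PROPOSED` until the seller decides. Keeping the rationale on the same object as the action means the “why” is available wherever the recommendation is shown.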

Strategic constraints

Scope constraints:

- Focused on a small set of PPC intents

- Designed only one happy path per intent

- Adapted responses for a mid-level seller familiar with PPC language

- Reused existing Marketplace dashboard components

- Planned accessibility and adaptability work for post-MVP

Prioritisation choices:

- Skipped full tone/voice refinement process

- Simplified all reports in the portal

Agent flows

Designing AI agents is about building trust. I used my agentic design principles to make sure recommendations feel transparent, collaborative and not pushy.

Onboarding to PPC Campaign

Sellers struggle to know where to start with PPC, often fearing wasted spend. I designed the onboarding flow around co-planning and transparency:

- The agent surfaces missed opportunities using the seller’s data and sets realistic expectations.

- Each recommendation explains the “why” and requires seller approval, keeping automation clear and optional.

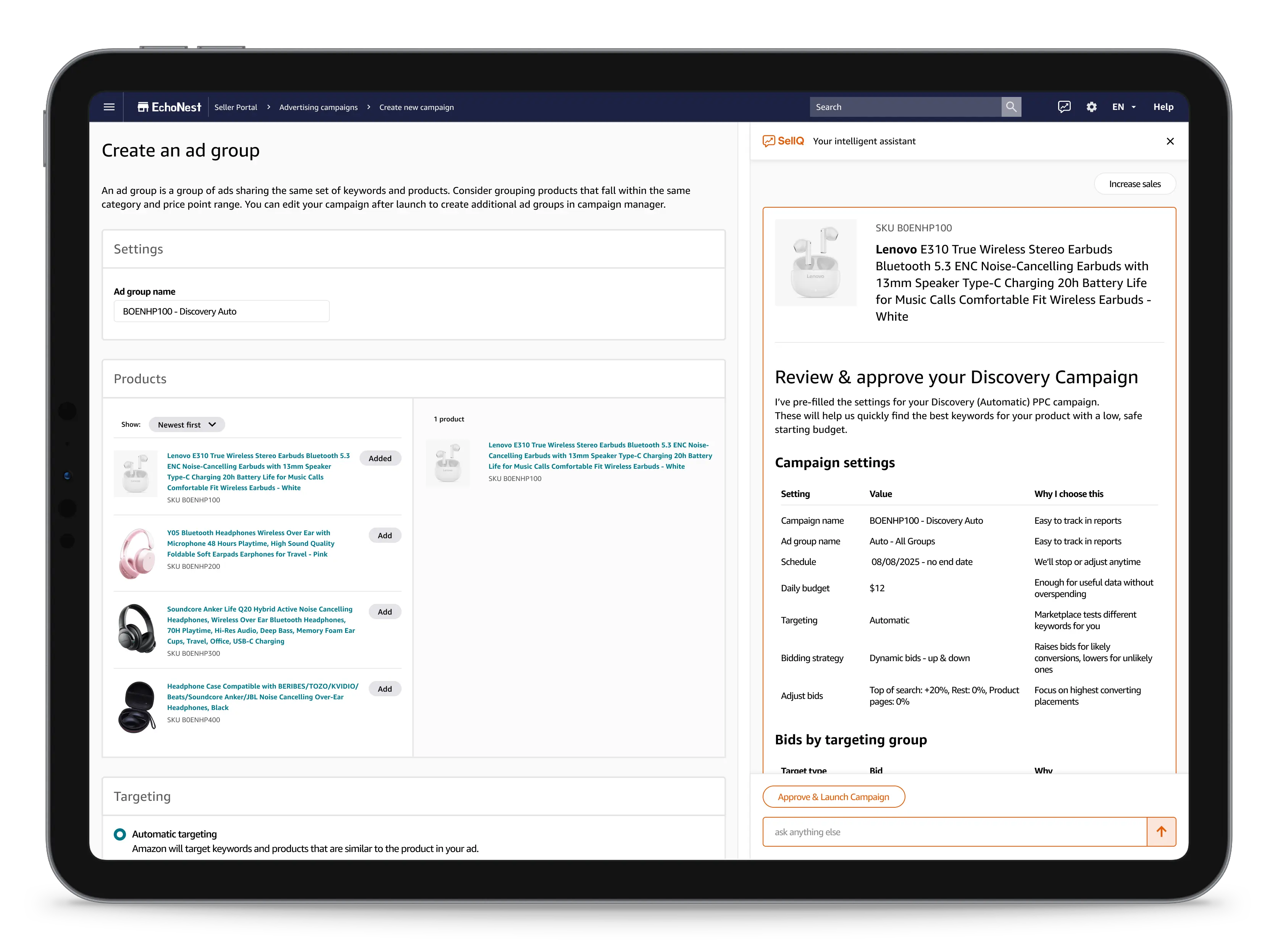

Creating Discovery Auto Campaign

Auto campaigns can feel like a black box, making sellers hesitant to trust automation.

- The agent confirms business goals upfront and adapts recommendations accordingly, making automation collaborative, not prescriptive.

- Each setting is explained in plain language, with full flexibility to override choices or ask follow-up questions.

Keyword harvesting

Sellers are unsure how to interpret keyword results, making optimisation time-consuming and risky.

- The agent acts like a consultant, showing its work step by step and explaining why each keyword is recommended or discarded.

- Sellers can interrupt anytime to ask follow-up questions, tweak numbers or override choices.

Biweekly reports

Sellers need clear visibility into campaign performance and guidance when things go wrong.

- The agent delivers biweekly reports, timed to match when PPC data stabilises on the marketplace.

- When results miss expectations, the agent is fully transparent and proposes a guided recovery plan.

Urgent issue handling

Some issues can’t wait for the biweekly report, like wasted spend, stalled conversions, or hitting budget caps.

- The agent flags urgent problems instantly and explains what’s happening and why in plain language.

- Sellers get a clear recovery plan, with step-by-step guidance to act quickly without losing control.
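As a rough illustration of how urgent issues could be detected and phrased in plain language, the sketch below checks two of the conditions mentioned above: a budget cap being hit and clicks that produce no orders. The `CampaignSnapshot` fields and the `min_clicks` threshold are assumptions made for this example, not values from the project.

```python
from dataclasses import dataclass


@dataclass
class CampaignSnapshot:
    name: str
    spend_today: float
    daily_budget: float
    clicks: int
    orders: int


def urgent_issues(c: CampaignSnapshot, min_clicks: int = 30) -> list[str]:
    """Return plain-language alerts; the thresholds are illustrative assumptions."""
    alerts = []
    # Budget cap reached: spend has met or exceeded the daily budget.
    if c.daily_budget > 0 and c.spend_today >= c.daily_budget:
        alerts.append(f"{c.name}: the daily budget cap has been reached.")
    # Stalled conversions: enough clicks to judge, but no orders.
    if c.clicks >= min_clicks and c.orders == 0:
        alerts.append(f"{c.name}: {c.clicks} clicks and no orders, so spend may be wasted.")
    return alerts


snapshot = CampaignSnapshot("Discovery Auto", spend_today=25.0, daily_budget=25.0, clicks=42, orders=0)
for alert in urgent_issues(snapshot):
    print(alert)
```

Each alert states what is happening in one sentence; the recovery plan and approval step would follow from there rather than the agent acting on its own.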

System guardrails

Automation always creates risk (overspending, biased results, errors). These guardrails protect sellers behind the scenes before any recommendation reaches them:

- Safety Nets: budget caps, policy scans, data freshness

- Transparency: always show “why” + preview of impact

- Fairness & Accountability: bias audits + recovery steps

- Privacy & Context: seller-only data + category signals
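A minimal sketch of how two of the safety nets (budget caps and data freshness) could run before a recommendation reaches the seller. The `ProposedChange` type, the function name and the 48-hour freshness window are hypothetical; policy scans and bias audits are out of scope for this example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class ProposedChange:
    campaign: str
    new_daily_budget: float
    data_as_of: datetime  # timestamp of the data behind the recommendation


def guardrail_violations(
    change: ProposedChange,
    budget_cap: float,
    now: datetime,
    max_data_age: timedelta = timedelta(hours=48),  # assumed freshness window
) -> list[str]:
    """Return the names of guardrails the change would break (empty list = safe to show)."""
    violations = []
    if change.new_daily_budget > budget_cap:
        violations.append("budget_cap")
    if now - change.data_as_of > max_data_age:
        violations.append("stale_data")
    return violations


now = datetime(2024, 1, 10, 12, 0, tzinfo=timezone.utc)
change = ProposedChange("Discovery Auto", 60.0, data_as_of=now - timedelta(days=3))
print(guardrail_violations(change, budget_cap=50.0, now=now))
```

Here the change breaks both guardrails, so it would be held back or revised before the seller ever sees it. Returning named violations rather than a bare boolean also gives the front end something to explain.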

Designing trust

I complemented the backend guardrails with front-end trust patterns so sellers could see, understand, and control automation:

Tone

- Clear, plain-English

- Concise

- Tailored to user data and history

AI-ready prompts

- Structured

- Transparent

- Respecting guardrails

Failure handling

- Honest about confidence level

- Accountable

- Quick to recover

Impact

By reducing manual steps, clarifying recommendations and building trust into automation, I predicted big gains for both sellers and the business.

Seller impact

- ~90% faster tasks

- Lower mental load

- Full seller control

- Save ~$100/month on third-party tools

Business impact

- +5–10% more sales

- Reduce wasted spend

- Scalable AI+UX trust pattern

- Reusable framework across workflows

Key learnings

This was a one-week sprint, so I optimised for speed and focused on proving value fast.

Since then, I’ve deepened my understanding of agentic workflows and would approach future iterations differently:

- Explore inline recommendations: test surfacing suggestions directly in the seller’s flow instead of a side panel.

- Refine context-awareness: improve continuity across chat history, actions, and recommendations.

Next steps

- Validate user interactions → observe how sellers engage with recommendations, approvals, and inline guidance to refine the workflow.

- Test tolerance for multi-tasking → explore how ready sellers are to trust the agent to execute multiple actions across campaigns simultaneously.